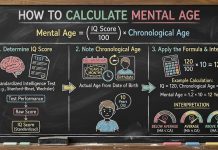

How to Calculate Mental Age Using This Simple Proven Method

The measurement of human intelligence has largely been a pursuit of quantifying the intangible. Among the most enduring and controversial metrics emerging from this century-long scientific endeavor is the concept of “mental age.” Originally conceived in the burgeoning laboratories of early 20th-century French psychology, mental age (MA) began as a pragmatic solution to a bureaucratic problem: classifying schoolchildren for appropriate educational placement. Today, however, the term occupies a bifurcated reality. In clinical neuropsychology and psychometrics, it represents a specific, historically bounded developmental metric used primarily in the assessment of intellectual disability and adaptive functioning. Conversely, in the consumer technology sector and popular consciousness, “brain age” or mental age has been rebranded as a dynamic, malleable score—a gamified target for self-improvement apps and digital cognitive training platforms.

This report endeavors to provide an exhaustive analysis of the mental age construct, tracing its lineage from the pioneering scales of Alfred Binet to the machine-learning algorithms of modern structural neuroimaging. It seeks to disentangle the valid psychometric applications of the concept from the commercialized simplifications that proliferate in the digital age. Furthermore, it examines the profound shift in diagnostic frameworks—from the rigid mathematical ratios of the early 1900s to the nuanced, multidimensional assessments of the DSM-5 and ICD-11. By synthesizing historical data, contemporary clinical standards, and cutting-edge neurobiological research, this document aims to serve as a definitive reference on the calculation, interpretation, and validity of mental age in all its iterations.

2. The Psychometric Genesis: Binet, Simon, and the Quantification of Development

To understand the mechanics of mental age calculation, one must first appreciate the intellectual climate in which it was born. The late 19th century was dominated by anthropometric approaches to intelligence. Figures like Samuel Morton and Paul Broca sought to correlate intellectual capacity with physical attributes, specifically cranial volume. The underlying assumption was biological determinism: the size of the “hardware” dictated the capacity of the “software.” However, these physical measurements proved to be poor predictors of academic success or cognitive adaptability. The field required a shift from the structural to the functional.

2.1 The Binet-Simon Scales (1905–1911)

In 1904, the French Ministry of Public Instruction commissioned Alfred Binet and his colleague Theodore Simon to create a method for identifying children who were struggling in standard Parisian classrooms and required “special education”. Binet’s radical insight was to abandon the search for a single, fixed biological marker of intelligence. Instead, he conceptualized intelligence as a complex interplay of faculties—judgment, practical sense, initiative, and the ability to adapt to circumstances.

Binet and Simon empirically observed that intelligence is not static but developmental; it grows with chronological age during childhood. They reasoned that if one could identify the tasks that a “typical” child of a specific age could perform, one could measure an individual child’s development against this normative benchmark.

The 1905 Binet-Simon Scale was the first instrument to operationalize this theory. It consisted of 30 tasks of increasing difficulty. These ranged from simple sensory tests (following a moving light with the eyes) to complex verbal abstractions (defining the difference between “esteem” and “affection”).

- The 1908 Revision: This iteration introduced the grouping of tasks by age levels. Binet determined, through testing hundreds of children, that specific tasks could be passed by 50-75% of children at a certain age.

- The 1911 Revision: Further refined the age placement of tasks and extended the scale up to adulthood.

Crucially, Binet did not view the “mental age” score as a permanent label. He explicitly warned against the “brutal pessimism” of those who saw intelligence as a fixed quantity. He believed that with “mental orthopedics”—targeted exercises to improve attention and discipline—a child’s mental age could be increased. This perspective is historically significant as it presages the modern “brain training” movement, suggesting a century-long continuity in the desire to actively manipulate cognitive development.

2.2 The Transatlantic Adaptation: Terman and the Stanford-Binet

While Binet created the tool, it was American psychologists who industrialized it. Lewis Terman at Stanford University recognized the utility of Binet’s method but found the French norms inapplicable to American children. In 1916, Terman published the Stanford-Binet Intelligence Scale, a rigorous standardization involving a large sample of American participants.

Terman’s revision was monumental for two reasons. First, it extended the test’s range and precision. Second, it institutionalized the calculation of the Intelligence Quotient (IQ). The concept of IQ had been proposed by German psychologist William Stern in 1912. Stern argued that the simple difference between Mental Age (MA) and Chronological Age (CA), i.e., $MA - CA$, was misleading: a 2-year lag is far more significant for a 4-year-old than for a 14-year-old. Stern proposed a ratio to standardize this discrepancy.

Terman adopted Stern’s ratio for the Stanford-Binet, creating the famous formula:

$$IQ = \frac{\text{Mental Age (MA)}}{\text{Chronological Age (CA)}} \times 100$$

This formula, the “Ratio IQ,” became the gold standard for decades. It possessed a seductive mathematical elegance:

- A child functioning exactly at their age level ($MA = CA$) received an IQ of 100.

- A child functioning above their age ($MA > CA$) received an IQ > 100.

- A child functioning below their age ($MA < CA$) received an IQ < 100.
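The ratio formula is easy to check by hand, but a few lines of Python make both the three cases above and Stern’s objection to the plain difference $MA - CA$ concrete. This is a minimal sketch: the function name `ratio_iq` is illustrative, and ages are given in months so that whole-number inputs keep the arithmetic clean.

```python
def ratio_iq(mental_age: float, chronological_age: float) -> float:
    """Stern/Terman ratio IQ: (MA / CA) x 100, with both ages in the same unit."""
    if chronological_age <= 0:
        raise ValueError("chronological age must be positive")
    # Multiplying before dividing avoids some floating-point rounding noise.
    return 100 * mental_age / chronological_age


# A 10-year-old (CA = 120 months) in each of the three cases above.
print(ratio_iq(120, 120))  # MA = CA -> 100.0
print(ratio_iq(138, 120))  # MA > CA -> 115.0
print(ratio_iq(96, 120))   # MA < CA -> 80.0

# Stern's point: the same 24-month lag weighs more at age 4 than at age 14.
print(ratio_iq(48 - 24, 48))              # 50.0
print(round(ratio_iq(168 - 24, 168), 1))  # 85.7
```

The identical 2-year lag costs 50 points at age 4 but only about 14 points at age 14, which is exactly the distortion a simple difference would hide.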

The widespread adoption of this metric was accelerated by World War I. Robert Yerkes, then president of the American Psychological Association, developed the Army Alpha and Beta tests to screen over 1.7 million recruits. These group-administered tests were rooted in the same mental age concepts, attempting to classify soldiers for officer training or discharge based on their “mental age” equivalents. This mass application solidified IQ testing in the American public consciousness but also sowed the seeds of controversy, as early results suggested the “average” American recruit had a shockingly low mental age (often cited as around 13 years), sparking debates about national intelligence and eugenics.

3. The Mathematical Mechanics of Mental Age Calculation

To truly comprehend the historical application of mental age, one must understand the specific psychometric protocols used to derive it. It is not merely a matter of answering questions; it is a structured process of establishing cognitive boundaries known as the Basal and the Ceiling.

3.1 The Basal-Ceiling Protocol

In the classic Stanford-Binet administration, the examiner does not simply ask all questions. Testing is adaptive.

- Establish the Basal Age: The examiner begins at an age level where the child is expected to succeed (often a year below their chronological age). The Basal Age is the highest level at which the child passes every item; if the child misses any item at the starting level, the examiner drops to lower levels until one is passed in full. The child is assumed to possess all the cognitive skills tested below this level.

- Proceed Upward: The examiner continues to the next age level. The child may answer some, but not all, items correctly.

- Establish the Ceiling Age: The examiner continues upward until reaching a level at which the child fails every item. This is the Ceiling Age; the child is assumed to be unable to pass any items above it.
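The basal and ceiling rules can be expressed as a short function over a record of item outcomes. This is a simplified sketch under stated assumptions: the record format (a dict from age level in years to a list of pass/fail results) and the name `find_basal_and_ceiling` are hypothetical, and real administrations also involve prescribed starting points, half-year levels at younger ages, and examiner judgment that this ignores. The sample record mirrors Subject A in the scenario that follows.

```python
def find_basal_and_ceiling(results: dict[int, list[bool]]) -> tuple[int, int]:
    """Return (basal, ceiling) age levels from per-level item outcomes.

    Basal: the highest level at which every item was passed.
    Ceiling: the lowest level above the basal at which every item was failed.
    """
    fully_passed = [level for level, items in results.items() if items and all(items)]
    if not fully_passed:
        raise ValueError("no level passed in full yet: test lower levels")
    basal = max(fully_passed)

    fully_failed = [level for level, items in results.items()
                    if level > basal and items and not any(items)]
    if not fully_failed:
        raise ValueError("no level failed in full yet: test higher levels")
    return basal, min(fully_failed)


# Hypothetical record matching Subject A (6 items per level).
subject_a = {
    9: [True] * 6,
    10: [True] * 4 + [False] * 2,
    11: [True] * 2 + [False] * 4,
    12: [False] * 6,
}
print(find_basal_and_ceiling(subject_a))  # (9, 12)
```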

Hypothetical Calculation Scenario:

Consider a child, Subject A, who has a Chronological Age (CA) of 10 years and 0 months (120 months).

- Basal: Subject A answers all items correctly at the 9-year level.

- Basal Mental Age = 9 years (108 months).

- Year 10 Level: Subject A answers 4 out of 6 items correctly.

- Each item at this level represents 2 months of credit ($12 \text{ months} / 6 \text{ items} = 2$).

- Credit = 8 months.

- Year 11 Level: Subject A answers 2 out of 6 items correctly.

- Credit = 4 months.

- Year 12 Level: Subject A answers 0 items correctly (Ceiling).

- Total Mental Age Calculation:

$$108 \text{ (Basal)} + 8 \text{ (Year 10)} + 4 \text{ (Year 11)} = 120 \text{ months}$$

- Result: Subject A has a Mental Age of 120 months (10 years).

- IQ Calculation:

$$IQ = (120 / 120) \times 100 = 100$$

Now consider Subject B, also 10 years old (120 months), but advanced.

- Basal: Subject B answers all items correctly at the 11-year level.

- Basal MA = 132 months.

- Year 12 Level: 3 items correct (+6 months).

- Year 13 Level: 0 items correct (Ceiling).

- Total MA: 138 months (11 years, 6 months).

- IQ Calculation:

$$IQ = (138 / 120) \times 100 = 115$$
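The partial-credit arithmetic for both subjects can be reproduced in a few lines. This sketch assumes, as the examples do, that every age level spans 12 months and contains six items (so each item is worth 2 months); actual Stanford-Binet editions did not always follow that layout, and the function names are illustrative.

```python
def mental_age_months(basal_years: int, correct_by_level: dict[int, int],
                      items_per_level: int = 6) -> float:
    """Basal age (in months) plus partial credit earned above the basal.

    Assumes each age level spans 12 months and holds `items_per_level` items.
    """
    months_per_item = 12 / items_per_level  # 2.0 months when there are 6 items
    credit = sum(correct * months_per_item
                 for level, correct in correct_by_level.items()
                 if level > basal_years)
    return basal_years * 12 + credit


def ratio_iq(ma_months: float, ca_months: float) -> float:
    return 100 * ma_months / ca_months


ma_a = mental_age_months(9, {10: 4, 11: 2, 12: 0})  # Subject A
ma_b = mental_age_months(11, {12: 3, 13: 0})        # Subject B
print(ma_a, ratio_iq(ma_a, 120))  # 120.0 100.0
print(ma_b, ratio_iq(ma_b, 120))  # 138.0 115.0
```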

These mechanics of “partial credit” allowed for a granular assessment of development. However, the system contained a fatal flaw when applied to older adolescents and adults.

3.2 The Adult Dilemma and the Statistical Artifact

The Ratio IQ formula assumes that mental age keeps growing in step with chronological age. The denominator (CA) does increase linearly with time, but the numerator (MA) does not: cognitive development, much like physical height, tends to plateau in late adolescence. A 16-year-old performs significantly better than a 10-year-old on cognitive tasks, but a 40-year-old does not necessarily perform “better” than a 30-year-old on raw developmental scales. In fact, on speeded tasks, the older adult may perform worse.

If one were to apply the Ratio IQ formula to a 40-year-old man:

- Assume his mental performance peaked at age 16 (the standard ceiling used in early tests).

- $MA = 16$.

- $CA = 40$.

- $$IQ = (16 / 40) \times 100 = 40.$$

This result is clinically nonsensical. It would classify an average-functioning adult as intellectually disabled simply because they continued to age chronologically. To patch this, early psychometricians arbitrarily capped the CA denominator at 16 or 18, regardless of the person’s actual age. This was a clumsy workaround rather than a real solution.
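The cap can be modeled as an optional upper limit on the denominator. In this minimal sketch the parameter name `ca_cap` is hypothetical, and the value 16 follows the 40-year-old example rather than any particular edition’s manual.

```python
from typing import Optional


def ratio_iq(mental_age: float, chronological_age: float,
             ca_cap: Optional[float] = None) -> float:
    """Ratio IQ, optionally capping the chronological-age denominator."""
    denominator = chronological_age
    if ca_cap is not None:
        # People older than the cap are scored as if they were ca_cap years old.
        denominator = min(chronological_age, ca_cap)
    return 100 * mental_age / denominator


print(ratio_iq(16, 40))             # uncapped: 40.0
print(ratio_iq(16, 40, ca_cap=16))  # capped:   100.0
print(ratio_iq(12, 10, ca_cap=16))  # children below the cap are unaffected: 120.0
```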

Furthermore, a statistical artifact plagued the system: the standard deviation of mental age scores increased with age (the spread for 6-year-olds was tighter than for 12-year-olds), but not in strict proportion to chronological age. Because the ratio divides by CA, the standard deviation of ratio IQs therefore varied from one age to the next. An IQ of 120 at age 6 did not fall at the same percentile as an IQ of 120 at age 12, making longitudinal comparisons invalid. The Ratio IQ was essentially a “rubber ruler,” stretching and shrinking depending on the age of the subject.
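To see why a fixed IQ value can mean different things at different ages, the sketch below converts the same ratio IQ of 120 into a percentile under two different spreads. The standard deviations used (12 points at age 6, 18 at age 12) are invented for illustration, not historical norms, and the normal-distribution assumption is a simplification.

```python
from statistics import NormalDist

# Hypothetical SDs of ratio IQ by age, chosen only to illustrate the artifact.
RATIO_IQ_SD = {6: 12.0, 12: 18.0}


def percentile_of_ratio_iq(iq: float, age: int) -> float:
    """Percentile rank of a ratio IQ, assuming a normal distribution with
    mean 100 and the hypothetical SD for that age."""
    return 100 * NormalDist(mu=100, sigma=RATIO_IQ_SD[age]).cdf(iq)


for age in (6, 12):
    print(f"IQ 120 at age {age}: {percentile_of_ratio_iq(120, age):.1f}th percentile")
```

Under these assumed spreads, the same score sits near the 95th percentile at age 6 but only around the 87th at age 12.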

4. The Modern Shift: Wechsler and Deviation IQ

The resolution to the “Adult Dilemma” came in 1939 with the publication of the Wechsler-Bellevue Intelligence Scale by David Wechsler. Wechsler, working at Bellevue Hospital in New York, realized that comparing adults to children was fundamentally flawed. He argued that adult intelligence should be measured by comparing an individual to their age-matched peers, not to a developmental curve.

4.1 The Mechanism of Deviation IQ

Wechsler abolished the Ratio IQ ($MA/CA$) and replaced it with Deviation IQ. In this system, raw scores on the test are converted to standard scores based on a normal distribution (the bell curve) for each specific age group.

- The Mean: The average score for any age group is set to 100.

- Standard Deviation: The spread is set to 15 points.

This mathematical transformation ensures that an IQ of 100 always means “average for your age,” whether you are 5, 25, or 75. It decoupled IQ from the concept of “mental age.”

- If a 70-year-old performs as well as the average 70-year-old, their IQ is 100.

- We do not need to ask if they have the “mental age” of a 20-year-old. We simply ask where they fall on the distribution of 70-year-olds.
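At its core, the deviation method is a z-score rescaled to a mean of 100 and an SD of 15. The sketch below shows that linear version with an invented norm group (mean raw score 40, SD 8, for 70-year-olds); published tests such as the WAIS-IV instead convert raw scores through age-specific norm tables, so treat this as a simplified illustration.

```python
def deviation_iq(raw_score: float, age_group_mean: float, age_group_sd: float,
                 scale_mean: float = 100, scale_sd: float = 15) -> float:
    """Place a raw score on the IQ scale relative to same-age peers."""
    z = (raw_score - age_group_mean) / age_group_sd  # distance from peers, in SDs
    return scale_mean + scale_sd * z


# Hypothetical norms for 70-year-olds: mean raw score 40, SD 8.
print(deviation_iq(40, 40, 8))  # exactly average for age -> 100.0
print(deviation_iq(56, 40, 8))  # two SDs above peers -> 130.0
print(deviation_iq(28, 40, 8))  # 1.5 SDs below peers -> 77.5
```

No mental age appears anywhere in the calculation: the only reference point is the distribution of the person’s own age group.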

Today, all major clinical intelligence tests—including the modern Stanford-Binet Fifth Edition (SB5) and the Wechsler Adult Intelligence Scale Fourth Edition (WAIS-IV)—use the Deviation IQ method. The term “mental age” has largely vanished from adult IQ reports, appearing only as a supplementary “age equivalent” score in specific subtests for descriptive purposes.

4.2 Divergent Constructs: g vs. Developmental Speed

Theoretical research supports this shift. Contemporary studies suggest that the mechanisms driving child development are different from those driving adult individual differences.

- Childhood: Intelligence growth is linked to the maturation of executive functions and working memory capacity. The brain is “growing” its hardware.

- Adulthood: Individual differences in g (general intelligence) are constrained by processing speed and the efficiency of neural networks. The hardware is built; the difference lies in how fast it runs.

This implies that “mental age” (a developmental metric) and “adult IQ” (an efficiency metric) are measuring related but distinct biological phenomena. Attempting to force adults into a mental age framework ignores the reality that adult cognition is characterized by stability and specialized knowledge (crystallized intelligence) rather than the rapid, broad acquisition of new structures seen in childhood.

5. Clinical Applications: Intellectual Disability and Adaptive Functioning

While “mental age” receded from general IQ testing, it found a permanent home in the diagnosis and description of Intellectual Disability (ID), formerly known as mental retardation. In this domain, the concept remains a crucial tool for communication and classification, though its application has evolved significantly under the DSM-5 and ICD-11.

5.1 Redefining Disability: Beyond the IQ Cutoff

Historically, an IQ score below 70 (two standard deviations below the mean) was the primary criterion for diagnosing intellectual disability. However, the Diagnostic and Statistical Manual of Mental Disorders, Fifth Edition (DSM-5) initiated a major paradigm shift. It de-emphasized strict IQ cutoffs in favor of assessing adaptive functioning—how well an individual handles the demands of everyday life.

This shift acknowledges that two people with the same IQ of 65 might function very differently. One might be gainfully employed and living independently (high adaptive functioning), while the other requires 24-hour care (low adaptive functioning).
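On the deviation scale, the historical cutoff of 70 sits exactly two standard deviations below the mean ($70 = 100 - 2 \times 15$), which under an idealized normal distribution corresponds to roughly the lowest 2.3% of the population. Python’s standard-library `statistics.NormalDist` makes the check short:

```python
from statistics import NormalDist

iq_scale = NormalDist(mu=100, sigma=15)  # idealized deviation-IQ distribution
cutoff = 70

z = (cutoff - iq_scale.mean) / iq_scale.stdev
share_below = iq_scale.cdf(cutoff)

print(z)                            # -2.0
print(round(100 * share_below, 1))  # 2.3 (percent of the population)
```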

5.2 Mental Age as a Descriptive Benchmark

Despite the move to adaptive functioning, mental age equivalents are still widely used by clinicians to convey the severity of a disability to parents, caregivers, and educators. They provide a tangible frame of reference. The following table illustrates the approximate correspondence between ID severity, IQ ranges, and adult mental age equivalents:

| ID Severity | IQ Range (Approx.) | Adult Mental Age Equivalent | Adaptive Capabilities & Characteristics |
| --- | --- | --- | --- |
| Mild | 50–70 | 9–12 years | Can typically master academic skills up to a 6th-grade level. As adults, can work, maintain social relationships, and live independently with minimal support. |
| Moderate | 35–50 | 6–9 years | Can communicate in simple language. Academic skills generally cap at a 2nd-grade level. Can perform unskilled or semiskilled work with supervision. Often live in group homes. |
| Severe | 20–35 | 3–6 years | Limited communication, often restricted to single words or phrases. Can learn basic self-care (eating, dressing) but requires extensive lifelong support and supervision. |
| Profound | < 20 | < 3 years | Significant limitations in sensorimotor functioning. Often nonverbal and dependent on caregivers for all aspects of daily life. High comorbidity with physical disabilities. |
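Because the table is essentially a lookup from an IQ band to a descriptive mental age range, it can be encoded directly. This is only a sketch of the table’s approximate correspondences: under DSM-5, severity is assigned on the basis of adaptive functioning, not IQ alone. The rule that a shared boundary score (such as 50) falls into the milder band is an arbitrary assumption made here, and the name `describe_iq` is illustrative.

```python
from typing import Optional

# (lowest IQ in band, severity, approximate adult mental age range in years)
# The Profound range (0, 3) stands for "under 3 years".
ID_BANDS = [
    (50, "Mild", (9, 12)),
    (35, "Moderate", (6, 9)),
    (20, "Severe", (3, 6)),
    (float("-inf"), "Profound", (0, 3)),
]


def describe_iq(iq: float) -> Optional[tuple[str, tuple[int, int]]]:
    """Return (severity, adult MA range) for an IQ at or below 70, else None.

    Descriptive only: severity under DSM-5 rests on adaptive functioning.
    """
    if iq > 70:
        return None
    for lower_bound, severity, ma_range in ID_BANDS:  # ordered mildest first
        if iq >= lower_bound:
            return severity, ma_range
    return None  # reached only for non-numeric input such as NaN


print(describe_iq(65))  # ('Mild', (9, 12))
print(describe_iq(40))  # ('Moderate', (6, 9))
```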

5.3 The Divergence of Social and Mental Age

A critical nuance in clinical assessment is the frequent disconnect between Mental Age (cognitive ability) and Social Age (adaptive social competence). This distinction was formalized with instruments like the Vineland Social Maturity Scale (VSMS), developed by Edgar Doll in 1936 to measure “social competence” rather than just IQ.

The VSMS assesses eight domains, including self-help (eating, dressing), locomotion, communication, and socialization. It produces a Social Age (SA) and a Social Quotient (SQ). While research indicates a strong correlation (approx. 0.80) between SQ and IQ in the general population, specific clinical populations show marked divergence.

- Down Syndrome: Individuals often exhibit a “social advantage,” where their social age and adaptive skills exceed their cognitive mental age. They may be more socially engaged and charming than their IQ score would predict.

- Autism Spectrum Disorder (ASD): Conversely, individuals with ASD often show the reverse pattern. A child might have a high cognitive mental age (e.g., solving complex puzzles, reading early) but a significantly lower social age (unable to read social cues, poor self-care).

Recognizing this discrepancy is vital for treatment planning. A focus solely on raising “mental age” (cognitive drills) might ignore the more pressing need to raise “social age” (life skills), which is often the primary determinant of independence and quality of life.
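Doll’s Social Quotient is computed with the same ratio logic as Terman’s IQ, with Social Age in place of Mental Age:

$$SQ = \frac{\text{Social Age (SA)}}{\text{Chronological Age (CA)}} \times 100$$

As a worked illustration with hypothetical ages, a 10-year-old whose adaptive skills match those of a typical 8-year-old (SA = 8 years) would have $SQ = (8 / 10) \times 100 = 80$, regardless of what their cognitive mental age happens to be.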

5.4 Educational and Forensic Implications

In the educational sector, the concept of mental age has historically guided curriculum design. The “developmental hypothesis” posits that children with ID pass through the same cognitive stages as typical children, just more slowly and stopping sooner. This led to the practice of using materials matched to mental age—giving a 14-year-old with a mental age of 7 a first-grade reader.

However, this practice is increasingly scrutinized for failing to be chronologically age-appropriate. Critics argue that a 14-year-old, regardless of mental age, has gone through puberty, has different interests, and deserves materials that respect their physical maturity (e.g., high-interest/low-readability books) rather than materials designed for much younger children.

In the forensic arena, mental age plays a sobering role in determining culpability. Courts often consider whether a defendant with a low mental age can form mens rea (criminal intent) or comprehend judicial proceedings. The logic is that one would not hold a 9-year-old fully criminally responsible, so one should not hold an adult with a mental age of 9 to the full standard of the law. This application underscores the immense weight the mental age construct still carries in society, determining freedom and sentencing.

6. The Digital Renaissance: “Brain Age” and the Gamification of Cognition

While clinical psychology was refining and narrowing the use of mental age, the consumer technology industry pushed the concept into the mainstream under the moniker “Brain Age.” This shift turned the metric from a diagnostic tool into an aspirational lifestyle target.

6.1 The Nintendo Paradigm: Dr. Kawashima’s Revolution

The turning point was Brain Age: Train Your Brain in Minutes a Day! for the Nintendo DS, released in Japan in 2005 and in North America in 2006. Based on the work of Japanese neuroscientist Dr. Ryuta Kawashima, the game popularized the idea that the brain, like a muscle, could be “trained” to stay young.

Dr. Kawashima’s methodology for calculating “Brain Age” differed radically from Binet’s. It did not use developmental milestones. Instead, it relied almost exclusively on processing speed and accuracy.

- The Assessment: Users performed rapid-fire tasks: Devilish Calculations (remembering previous answers while solving new ones), Number Cruncher (rapid counting), and Stroop tests (naming ink colors of conflicting words).

- The Algorithm: The game compared the user’s reaction times to an “ideal” benchmark (established as 20 years old).

- The Score: Slow reaction times resulted in a high “Brain Age” (e.g., 60 or 80). Fast, accurate performance lowered the age toward the ideal 20.

The game claimed to increase blood flow to the prefrontal cortex. It was a commercial success, but the scientific evidence on its efficacy is mixed. Studies confirm that playing the game improves performance on the game tasks (practice effects) and can boost processing speed. However, evidence for “far transfer” (the idea that playing Brain Age makes you better at managing finances or driving) is inconsistent. The “age” provided is less a valid psychometric measure and more a feedback mechanism for reaction time.

6.2 The App Ecosystem: Lumosity, Peak, and CogniFit

Following Nintendo, a wave of mobile apps emerged, each with its own proprietary scoring system attempting to quantify cognitive age.

- Lumosity: This platform utilizes the Lumosity Performance Index (LPI). Rather than a direct “age,” it creates a standardized score derived from millions of user data points. Interestingly, independent analyses have shown strong correlations ($r \approx 0.85$) between LPI and standardized academic tests like the SAT and ACT. This suggests that while gamified, these tasks do tap into g (general intelligence) and fluid reasoning, lending some validity to their metrics compared to simple entertainment quizzes.

- Peak: Developed in collaboration with neuroscientists from Cambridge and Yale, Peak aggregates performance into a “Brain Score” and provides a comparative “Brain Age”. It emphasizes “neurotiming” and coordination games.

- CogniFit: This platform adopts a more clinical aesthetic. It calculates a “Cognitive Age” by comparing a user’s 0–800 score against age-normed cohorts. If a 50-year-old scores 400 (the average for their age group), their cognitive age is 50. If they score 600 (the average for a 30-year-old), their cognitive age is reported as 30. This pairs the age-normed cohorts of Deviation IQ with Binet’s age-equivalent logic: the reported age is the cohort whose average score matches the user’s.
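The age-equivalent logic described for CogniFit can be sketched as an interpolation over cohort averages. CogniFit’s actual algorithm and norms are not public in this text; the cohort table below is hypothetical except for the two points taken from the example (an average of 600 at age 30 and 400 at age 50), and linear interpolation between cohorts is an assumption.

```python
# Hypothetical cohort averages on a 0-800 scale, keyed by age. Only the values
# for ages 30 (600) and 50 (400) come from the example; 20 and 70 are invented.
COHORT_MEANS = {20: 650.0, 30: 600.0, 50: 400.0, 70: 300.0}


def cognitive_age(score: float, cohort_means: dict[int, float] = COHORT_MEANS) -> float:
    """Age whose cohort average equals `score`, by linear interpolation.

    Assumes cohort averages decrease steadily with age; scores outside the
    normed range are clamped to the youngest or oldest cohort.
    """
    points = sorted(cohort_means.items())  # (age, mean) pairs, youngest first
    if score >= points[0][1]:
        return float(points[0][0])
    if score <= points[-1][1]:
        return float(points[-1][0])
    for (age_lo, mean_lo), (age_hi, mean_hi) in zip(points, points[1:]):
        if mean_hi <= score <= mean_lo:
            fraction = (mean_lo - score) / (mean_lo - mean_hi)
            return age_lo + fraction * (age_hi - age_lo)
    raise ValueError("cohort means must decrease with age")


print(cognitive_age(400))  # 50.0
print(cognitive_age(600))  # 30.0
print(cognitive_age(500))  # 40.0, halfway between the 30- and 50-year-old averages
```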

6.3 Thinkie and Biofeedback

A newer entrant, Thinkie, bridges the gap between gaming and biology. It uses a sensor (likely fNIRS based on descriptions of “activity” and color change) to measure brain activity directly during tasks. Users receive a “Brain Age” based on categories like mental speed and attention switching, but with the added layer of real-time biofeedback (warmer colors = higher activity). This represents the next evolution: measuring the effort the brain exerts, not just the output.

7. Neurobiological Perspectives: Structural BrainAGE

In the realm of rigorous neuroscience, “mental age” has found a new, biological definition known as BrainAGE (Brain Age Gap Estimation). This metric moves beyond performance tests entirely, looking directly at the anatomy of the brain.

7.1 Machine Learning and MRI

BrainAGE relies on structural Magnetic Resonance Imaging (MRI). Researchers train machine learning algorithms on thousands of scans from healthy individuals aged 5 to 90. The algorithm learns to identify the structural features associated with aging: cortical thinning, ventricular enlargement, and reduction in gray matter volume.

When a new subject is scanned, the algorithm predicts their age based solely on their brain structure.

- The Calculation: $\text{BrainAGE} = \text{Predicted Age} - \text{Chronological Age}$.

- Interpretation:

- Positive Gap: If a 50-year-old has a predicted brain age of 60, they have a +10 year gap. This suggests accelerated atrophy and is a risk factor for neurodegeneration.

- Negative Gap: If a 50-year-old has a predicted brain age of 40, they have a -10 year gap, suggesting neuroprotection or “youthful” brain structure.
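The gap calculation is straightforward once a model exists; the hard part is the model. In the minimal sketch below, an ordinary least-squares line fitted to six synthetic “healthy” subjects stands in for the multivariate machine-learning models trained on thousands of real scans. The single feature (a normalized gray-matter index) and all of its values are invented for illustration.

```python
from statistics import fmean

# Synthetic training data: a hypothetical gray-matter index that declines with age.
train_feature = [0.95, 0.90, 0.85, 0.80, 0.75, 0.70]
train_age = [20, 30, 40, 50, 60, 70]


def fit_line(xs: list[float], ys: list[float]) -> tuple[float, float]:
    """Ordinary least squares for y = slope * x + intercept."""
    x_bar, y_bar = fmean(xs), fmean(ys)
    sxy = sum((x - x_bar) * (y - y_bar) for x, y in zip(xs, ys))
    sxx = sum((x - x_bar) ** 2 for x in xs)
    slope = sxy / sxx
    return slope, y_bar - slope * x_bar


# "Train" on the healthy reference sample: predict age from brain structure.
slope, intercept = fit_line(train_feature, train_age)


def predicted_brain_age(feature: float) -> float:
    return slope * feature + intercept


def brain_age_gap(feature: float, chronological_age: float) -> float:
    """BrainAGE = predicted age - chronological age (positive = older-looking brain)."""
    return predicted_brain_age(feature) - chronological_age


# Two hypothetical 50-year-olds, mirroring the interpretation above.
print(round(brain_age_gap(0.75, 50), 1))  # about +10 (older-looking brain)
print(round(brain_age_gap(0.85, 50), 1))  # about -10 (younger-looking brain)
```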

7.2 Biomarkers and Early Life Determinants

BrainAGE shows considerable clinical promise. High BrainAGE scores have been shown to predict:

- Mortality: An older-looking brain is associated with a higher risk of death.

- Dementia: Accelerated brain aging often precedes the cognitive symptoms of Alzheimer’s disease and mild cognitive impairment (MCI).

- Physical Health: Conditions like diabetes and hypertension are correlated with higher BrainAGE.

However, recent research challenges the idea that BrainAGE only measures the rate of aging. Studies comparing longitudinal data (tracking the same people over time) with cross-sectional data suggest that “Brain Age” in adulthood is heavily influenced by early life factors. Factors like birth weight and polygenic scores (genetic profiles) set a “baseline” for brain structure. A person might have a “high” BrainAGE not because they are aging fast, but because their brain developed differently in utero. This nuance, distinguishing between atrophy and developmental baseline, is the current frontier of neuroimaging research.

8. The Socio-Economic Context of Cognitive Aging

Cognitive age is not merely a biological or psychometric fact; it is deeply influenced by lifestyle and environment. The “Use It or Lose It” hypothesis has been extensively studied in relation to occupation and technology.

8.1 Occupational Complexity and Education

Research consistently shows that individuals with higher educational attainment and more complex occupations maintain “younger” cognitive profiles for longer. Jobs involving complex data, people, or things (e.g., social work, data analysis) provide a “cognitive reserve.” This reserve buffers the brain against the pathology of aging. Even if the physical brain accumulates damage (like Alzheimer’s plaques), the high-functioning network allows the person to maintain a normal mental age for longer. Conversely, hazardous work environments can negate these benefits.

8.2 The Protective Effect of Technology

Contrary to the narrative of “digital dementia,” recent evidence suggests that technology use among older adults is protective. A meta-analysis published in Nature Human Behaviour found that regular use of computers and smartphones was associated with a 58% lower risk of cognitive impairment. Engaging with technology requires learning new skills, fine motor control, and visual-spatial processing—essentially a continuous, real-world “brain training” regimen.

9. The Wild West of Online Testing: Navigating Scams and Validity

The public demand to “know my mental age” has created a fertile ground for online scams and invalid tests. It is critical for users to distinguish between legitimate assessment and entertainment.

9.1 Red Flags for Invalid Tests

- Subjectivity: Tests that ask lifestyle questions (“Do you like loud music?”) rather than performance tasks are measuring personality, not cognition.

- The “Free” Bait-and-Switch: Many sites offer a “free” test but demand payment or credit card details to reveal the results. This is often a subscription trap.

- Instant Results: Valid cognitive testing takes time. A 5-minute quiz cannot accurately map the complexity of human intelligence.

9.2 Valid Remote Assessment

Legitimate online testing exists but looks different. Platforms like Creyos (formerly Cambridge Brain Sciences) offer digitized versions of classic neuropsychological tasks (e.g., Spatial Span, Token Search) used in clinical research. These are fee-based services often used by healthcare providers.

Furthermore, the COVID-19 pandemic accelerated the validation of tele-neuropsychology. Studies have shown that standard tests like the WAIS-IV can be administered remotely via video call with high validity, provided that “red flags” are managed. These technical red flags include:

- Distractions: A noisy environment or interruptions.

- Device Issues: Using a smartphone instead of a tablet/PC (screen size affects visual tasks).

- Connection Latency: Lags that interfere with timing-based tasks.

10. Conclusion

The concept of mental age is a testament to psychology’s evolving understanding of the human mind. It began with Binet as a compassionate attempt to help struggling children, was transformed by Terman and Stern into a rigid mathematical ratio that defined a generation, and was eventually deconstructed by Wechsler into the statistical deviation scores used today.

While the “Ratio IQ” calculation is obsolete in clinical diagnostics, the spirit of mental age persists. It lives on in the adaptive functioning assessments for intellectual disability, ensuring that support is defined by need rather than just a number. It thrives in the digital “Brain Age” market, motivating millions to engage in cognitive exercise, even if the “age” score is more metaphor than metric. And it has been reborn in the laboratory as BrainAGE, a powerful biological marker that may one day help us prevent neurodegeneration before it starts.

For the individual asking “how to calculate mental age,” the answer depends entirely on the purpose. For entertainment and motivation, an app’s processing speed algorithm suffices. For a diagnosis of disability, a comprehensive clinical evaluation of adaptive skills is required. And for a true understanding of brain health, the future lies in the convergence of lifestyle factors, cognitive reserve, and neuroimaging biomarkers. Intelligence, as Binet correctly surmised over a century ago, is too complex to be captured by a single number, but our attempts to measure it continue to illuminate the fascinating trajectory of human development.